AI Agent QA & Testing Framework: Ensuring Reliability Before Going Live

AI agents don't just answer questions; they make decisions and take actions that affect real business outcomes. A single wrong API call can trigger refunds, delete customer data, or violate compliance rules. Production failures cost far more than development mistakes. This guide lays out an end-to-end AI agent testing framework for establishing reliability before launch.

Why AI Agent QA Is Critical Before Production Deployment

AI agents control workflows that touch customer data, financial systems, code deployments, and compliance processes. When they fail, consequences cascade quickly. Wrong tool calls hit production APIs. Faulty reasoning escalates the wrong tickets or blocks valid deployments. Bad decisions create compliance violations that trigger audits.

Traditional software testing misses these risks. Static tests work for predictable code but fail against probabilistic AI behavior. Agents evolve with new data and feedback, breaking tests designed for fixed logic. Production-grade AI agents need specialized QA frameworks built for their unique failure modes.

How AI Agent QA Differs from Traditional Software & AI Testing

Traditional QA vs AI Agent QA

Regular software produces identical outputs from identical inputs. AI agents behave probabilistically: the same prompt might generate slightly different reasoning paths on each run. Traditional tests expect exact matches. Agent tests check that results fall within acceptable ranges.

Static code analysis finds syntax errors. AI agents use dynamic logic that evolves, so tests must simulate real-world scenarios rather than just check syntax. Human-in-the-loop validation becomes mandatory for high-risk actions.
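One way to picture range-based validation is to run the same prompt many times and assert on a pass rate instead of an exact string. The sketch below is illustrative only: `fake_agent`, the 50-run sample, the 95% bar, and the $50 refund ceiling are all assumptions standing in for a real agent and a real policy.

```python
import random


# Hypothetical stand-in for a non-deterministic agent: the wording of the
# reply varies between runs, while the structured action should stay valid.
def fake_agent(prompt: str, rng: random.Random) -> dict:
    reply = rng.choice([
        "Refund approved.",
        "Approved: your refund has been issued.",
        "Your refund is on its way.",
    ])
    return {"action": "issue_refund", "amount": 40.0, "reply": reply}


def refund_is_acceptable(result: dict) -> bool:
    """Check the action and an assumed policy range, not the exact wording."""
    return result["action"] == "issue_refund" and 0 < result["amount"] <= 50.0


def pass_rate(prompt: str, check, runs: int = 50, seed: int = 0) -> float:
    """Run the agent repeatedly and return the share of runs that pass."""
    rng = random.Random(seed)
    passed = sum(1 for _ in range(runs) if check(fake_agent(prompt, rng)))
    return passed / runs


rate = pass_rate("Refund order 123", refund_is_acceptable)
assert rate >= 0.95, f"pass rate {rate:.0%} is below the assumed 95% bar"
```

An exact-match assertion on `reply` would fail intermittently here even though every run takes the correct action, which is the gap between traditional and agent-oriented tests.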

Why LLM Accuracy ≠ Agent Reliability

A language model scoring 95% on benchmarks can still fail catastrophically in production. Hallucinations create wrong tool calls. Context loss midway through workflows produces incomplete actions. Multi-step reasoning compounds small errors into complete failures.

Model accuracy measures single responses. Agent reliability measures end-to-end workflow success. An agent calling the right API with wrong parameters fails completely, even if the model "understands" the question perfectly.
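The "right API, wrong parameters" failure can be tested directly by validating the tool call an agent proposes before it is executed. This is a minimal sketch under stated assumptions: the `issue_refund` tool, its argument names, and the hand-written `TOOL_SCHEMAS` table are hypothetical, and a real system would more likely validate against its own tool definitions.

```python
# Hypothetical tool schema: argument name -> accepted Python types.
TOOL_SCHEMAS = {
    "issue_refund": {"order_id": (str,), "amount": (int, float)},
}


def validate_tool_call(call: dict) -> list:
    """Return a list of problems with a proposed tool call (empty if valid)."""
    schema = TOOL_SCHEMAS.get(call.get("tool"))
    if schema is None:
        return [f"unknown tool: {call.get('tool')}"]
    args = call.get("args", {})
    errors = []
    for name, types in schema.items():
        if name not in args:
            errors.append(f"missing argument: {name}")
        elif not isinstance(args[name], types):
            errors.append(f"wrong type for argument: {name}")
    for name in args:
        if name not in schema:
            errors.append(f"unexpected argument: {name}")
    return errors


good_call = {"tool": "issue_refund", "args": {"order_id": "A-1", "amount": 25.0}}
bad_call = {"tool": "issue_refund", "args": {"order_id": "A-1", "amount": "25"}}

print(validate_tool_call(good_call))
print(validate_tool_call(bad_call))
```

The second call names the correct tool and would look right to a reviewer skimming the transcript, yet it is rejected because the amount is a string.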

AI agent development for SaaS, built for scale, security, and real-world workflows.

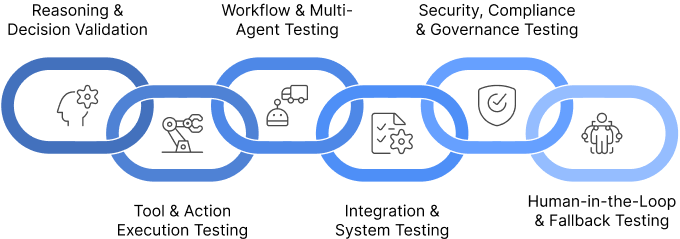

The AI Agent QA & Testing Framework (End-to-End)

This six-layer framework catches failures before they reach production. Each layer validates specific risks while building toward complete system reliability.

Layer 1 – Reasoning & Decision Validation

Test prompts across normal, edge-case, and adversarial scenarios. Chain-of-thought validation checks whether the reasoning steps make logical sense. Scenario simulations cover conflicting data, incomplete information, and ambiguous goals.

Example: A support agent receives "refund expensive purchase" from a VIP customer. Tests validate that it checks contract terms, billing history, and escalation rules before acting, rather than just generating refund text.
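The refund example can be sketched as a check over the agent's recorded steps. The code assumes the agent exposes its trace as an ordered list of step names; the step names themselves (`check_contract_terms` and so on) are hypothetical labels chosen for illustration.

```python
# Assumed step names for the three checks named in the example.
REQUIRED_CHECKS = {
    "check_contract_terms",
    "check_billing_history",
    "check_escalation_rules",
}


def checks_precede_action(trace: list, action: str = "issue_refund") -> bool:
    """True if every required check ran before the first `action` step.

    A trace that never takes the action passes trivially.
    """
    if action not in trace:
        return True
    steps_before_action = set(trace[: trace.index(action)])
    return REQUIRED_CHECKS <= steps_before_action


good_trace = [
    "check_contract_terms",
    "check_billing_history",
    "check_escalation_rules",
    "issue_refund",
]
# Acts after a single check and only looks at the contract afterwards.
bad_trace = ["check_billing_history", "issue_refund", "check_contract_terms"]

assert checks_precede_action(good_trace)
assert not checks_precede_action(bad_trace)
```

Checking order, and not just presence, matters: `bad_trace` does eventually read the contract terms, but only after the refund has gone out.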

Layer 2 – Tool & Action Execution Testing

Mock all external APIs to test call correctness, parameter validation, and permission boundaries. Failure injection tests retry logic, timeout handling, and graceful degradation. Idempotency checks ensure that repeated calls produce identical, safe results.

Example: A billing API agent test injects HTTP 500 errors. The agent must retry three times, then escalate with full context, rather than crash or spam the endpoint.
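A failure-injection test along these lines can be written with the standard library's `unittest.mock`. The sketch below makes several assumptions: `charge_with_retry`, `BillingError`, and the `escalate` callback are hypothetical names, and "retry three times" is read as one initial call plus three retries. A production version would normally add backoff between attempts, which is left out here to keep the test fast.

```python
from unittest.mock import Mock


class BillingError(Exception):
    """Hypothetical error raised when the billing API returns a 5xx."""


def charge_with_retry(billing_api, payload, escalate, max_retries=3):
    """Call the billing API; after the retries are exhausted, escalate once."""
    errors = []
    for attempt in range(max_retries + 1):  # initial call + max_retries
        try:
            return billing_api(payload)
        except BillingError as exc:
            errors.append(f"attempt {attempt + 1}: {exc}")
    # Hand the human everything needed to act: the request and each failure.
    escalate({"payload": payload, "errors": errors})
    return None


# Failure injection: the mocked API raises on every call.
billing_api = Mock(side_effect=BillingError("HTTP 500"))
escalate = Mock()

result = charge_with_retry(billing_api, {"invoice_id": "inv-1"}, escalate)

assert result is None
assert billing_api.call_count == 4      # bounded, so the endpoint is not spammed
escalate.assert_called_once()           # exactly one escalation
```

Asserting on `call_count` is what distinguishes "retries sensibly" from "hammers the endpoint", and inspecting the escalation payload confirms the context reached the human.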

Layer 3 – Workflow & Multi-Agent Testing

End-to-end workflow tests simulate complete user journeys. Multi-agent scenarios test handoffs, shared context, and failure propagation. State consistency validation ensures memory persists correctly across sessions.

Example: A workflow runs from product feedback to prioritization to PRD. The prioritization agent must receive complete context from the feedback agent, including customer quotes and frequency data.
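A handoff check for this workflow might look like the following. The `FeedbackHandoff` shape and its field names are assumptions made for illustration; the point is that the receiving agent's test fails loudly when quotes or frequency data are dropped in transit.

```python
from dataclasses import dataclass, field


@dataclass
class FeedbackHandoff:
    """Hypothetical payload passed from the feedback agent onward."""
    theme: str
    customer_quotes: list = field(default_factory=list)
    frequency: int = 0  # how many customers raised this theme


def missing_context(handoff: FeedbackHandoff) -> list:
    """Return the names of fields the prioritization agent would be missing."""
    problems = []
    if not handoff.theme.strip():
        problems.append("theme")
    if not handoff.customer_quotes:
        problems.append("customer_quotes")
    if handoff.frequency <= 0:
        problems.append("frequency")
    return problems


complete = FeedbackHandoff(
    theme="CSV export is slow",
    customer_quotes=["Exports take ten minutes for us."],
    frequency=14,
)
truncated = FeedbackHandoff(theme="CSV export is slow")

assert missing_context(complete) == []
assert missing_context(truncated) == ["customer_quotes", "frequency"]
```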

Layer 4 – Integration & System Testing

Live integration tests hit staging environments for CRM, billing, and support systems. Data integrity validation confirms that nothing is corrupted during agent processing. Latency testing confirms sub-3-second responses at peak load.

Example: A support-to-CRM agent test creates real Zendesk tickets, validates that the Salesforce records match exactly, and confirms there are no duplicate entries or missing fields.
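The record-matching step can be sketched independently of any vendor SDK. The code below does not call Zendesk or Salesforce; it assumes the test has already fetched both sides into plain dictionaries, and the field names in `REQUIRED_FIELDS` are hypothetical.

```python
from collections import Counter

# Assumed fields that must match between the ticket and the CRM record.
REQUIRED_FIELDS = ("ticket_id", "email", "subject")


def integrity_issues(tickets: list, crm_records: list) -> list:
    """Compare source tickets with CRM records and list every discrepancy."""
    counts = Counter(r.get("ticket_id") for r in crm_records)
    by_id = {r.get("ticket_id"): r for r in crm_records}
    issues = []
    for ticket in tickets:
        tid = ticket["ticket_id"]
        if counts[tid] == 0:
            issues.append(f"{tid}: missing in CRM")
            continue
        if counts[tid] > 1:
            issues.append(f"{tid}: duplicated {counts[tid]} times")
        record = by_id[tid]
        for name in REQUIRED_FIELDS:
            if record.get(name) != ticket.get(name):
                issues.append(f"{tid}: field '{name}' mismatch")
    return issues


tickets = [
    {"ticket_id": "T1", "email": "a@example.com", "subject": "Login fails"},
    {"ticket_id": "T2", "email": "b@example.com", "subject": "Billing question"},
]
crm_records = [
    {"ticket_id": "T1", "email": "a@example.com", "subject": "Login fails"},
    {"ticket_id": "T2", "email": "b@example.com"},  # subject was dropped
    {"ticket_id": "T2", "email": "b@example.com"},  # and the record duplicated
]

for issue in integrity_issues(tickets, crm_records):
    print(issue)
```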

Layer 5 – Security, Compliance & Governance Testing

Role-based access validation confirms that agents respect permission boundaries. SOC 2 readiness tests verify that agents generate complete audit trails. Prompt injection defense tests simulate malicious inputs. Data residency validation ensures compliance with regional laws.

Example: A security agent test attempts unauthorized database writes. The system must block them cleanly while logging the violation attempt in full.
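The block-and-log behavior in this example can be expressed as a small test. Everything named here is an assumption for the sake of illustration: the `ROLE_PERMISSIONS` table, the permission strings, and the in-memory `audit_log` list, which stands in for whatever audit store a real deployment uses.

```python
class PermissionDenied(Exception):
    """Raised when an agent attempts an action outside its role."""


# Hypothetical role table: role name -> permissions granted.
ROLE_PERMISSIONS = {
    "support_agent": {"tickets:read", "tickets:write"},
}


def authorize(role: str, permission: str, audit_log: list) -> None:
    """Record every attempt, then raise if the role lacks the permission."""
    allowed = permission in ROLE_PERMISSIONS.get(role, set())
    # Log before raising so that denied attempts are never lost.
    audit_log.append({"role": role, "permission": permission, "allowed": allowed})
    if not allowed:
        raise PermissionDenied(f"{role} may not perform {permission}")


audit_log = []
authorize("support_agent", "tickets:read", audit_log)

try:
    authorize("support_agent", "db:write", audit_log)
    blocked = False
except PermissionDenied:
    blocked = True

assert blocked
assert audit_log[-1] == {
    "role": "support_agent",
    "permission": "db:write",
    "allowed": False,
}
```

Writing the audit entry before raising is a deliberate choice in this sketch: the test would otherwise pass on the block alone while the violation went unrecorded.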

Layer 6 – Human-in-the-Loop & Fallback Testing

Escalation scenario testing confirms that handoffs include complete context. Approval workflow validation tests one-click approve/reject flows. Fail-safe testing simulates total agent failure: the system must route to a human without data loss.

Example: A high-value refund request correctly pauses for PM approval, with the full reasoning trail, customer history, and recommended action displayed.
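An approval gate of this kind can be tested by asserting both that the action pauses and that the reviewer receives everything needed to decide. The sketch assumes a hypothetical `handle_refund` function, a $500 cutoff, and a fixed recommended action; none of these come from a real system.

```python
APPROVAL_THRESHOLD = 500.0  # assumed cutoff for "high-value"

# Fields a reviewer needs to see before approving or rejecting.
REVIEW_FIELDS = ("reasoning", "customer_history", "recommended_action")


def handle_refund(request: dict) -> dict:
    """Execute small refunds; pause large ones for human approval."""
    decision = {
        "order_id": request["order_id"],
        "amount": request["amount"],
        "reasoning": request["reasoning"],
        "customer_history": request["customer_history"],
        "recommended_action": "approve_refund",
    }
    if request["amount"] >= APPROVAL_THRESHOLD:
        decision["status"] = "pending_approval"  # nothing is executed yet
    else:
        decision["status"] = "executed"
    return decision


high_value = handle_refund({
    "order_id": "A-77",
    "amount": 1200.0,
    "reasoning": ["Within 30-day window", "Item reported defective"],
    "customer_history": {"orders": 18, "prior_refunds": 0},
})

assert high_value["status"] == "pending_approval"
assert all(high_value.get(name) for name in REVIEW_FIELDS)
```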

QA Metrics That Actually Matter for AI Agents

- Task success rate measures complete workflows, not individual responses. A 92% success rate means 92% of support tickets are resolved correctly from start to finish.

- Decision accuracy tracks reasoning correctness against ground-truth scenarios. Action correctness validates that tool calls hit the right endpoints with the correct parameters.

- Latency percentiles matter more than averages: keeping the 95th percentile under 3 seconds prevents user frustration. Escalation frequency shows human dependency (target under 15%).

- Drift detection alerts fire when performance drops 5% week-over-week. Cost per workflow tracks token efficiency (target under $0.05 for support agents).

- Accuracy alone misleads. A 98% accurate model that calls the wrong APIs still fails completely. Prioritize end-to-end metrics over component scores.
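The metrics above reduce to a few lines of arithmetic. The following sketch uses made-up sample data, a nearest-rank percentile, and a relative week-over-week drop for drift; the function names are illustrative, and the thresholds are the targets quoted in the list.

```python
import math


def task_success_rate(outcomes: list) -> float:
    """Share of complete workflows that ended correctly."""
    return sum(outcomes) / len(outcomes)


def percentile(values: list, pct: float) -> float:
    """Nearest-rank percentile: smallest value with pct% of data at or below."""
    ordered = sorted(values)
    rank = math.ceil(pct / 100 * len(ordered))
    return ordered[max(rank, 1) - 1]


def has_drifted(last_week: float, this_week: float, threshold: float = 0.05) -> bool:
    """True when the metric fell by at least `threshold`, relative to last week."""
    return (last_week - this_week) / last_week >= threshold


# Made-up sample: 25 workflows, 10 latency measurements in seconds.
outcomes = [True] * 23 + [False] * 2
latencies = [0.8, 1.1, 1.4, 0.9, 2.7, 1.0, 1.2, 3.4, 1.3, 1.5]

success = task_success_rate(outcomes)     # 23 of 25 workflows
mean_latency = sum(latencies) / len(latencies)
p95_latency = percentile(latencies, 95)

print(f"success rate: {success:.0%}")
print(f"mean latency: {mean_latency:.2f}s, p95 latency: {p95_latency:.1f}s")
print("drift alert:", has_drifted(last_week=0.92, this_week=0.86))
```

In this sample the mean latency sits comfortably under 3 seconds while the 95th percentile does not, which is why the percentile is the figure to alert on.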

Common QA Mistakes SaaS Teams Make with AI Agents

- Teams test prompts in isolation, missing integration failures. Prompt A works perfectly until paired with Tool B's unexpected error response.

- Teams that skip integration testing deploy agents that crash against production APIs. Without production monitoring, silent failures go undetected for weeks.

- Teams ignore adversarial testing. Their agents handle normal cases perfectly but fail against malicious inputs or edge cases. Without a rollback strategy, broken agents keep running until someone intervenes manually.

- Most critical: testing only happy paths. Real workflows constantly hit errors, timeouts, and permission-denied responses. Agents must handle production reality, not demo scenarios.
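A simple guard against happy-path-only testing is a table of cases in which the unhappy outcomes outnumber the happy one. This sketch assumes a hypothetical `next_step` policy function and invented status strings; the same table could feed a parametrized test in whichever test runner a team already uses.

```python
def next_step(tool_result: dict) -> str:
    """Hypothetical policy: decide what the agent does after a tool call."""
    status = tool_result.get("status")
    if status == "ok":
        return "continue"
    if status in ("timeout", "server_error"):
        return "retry"
    # Permission problems and anything unrecognized go to a human.
    return "escalate"


# One happy path, four unhappy ones.
CASES = [
    ({"status": "ok"}, "continue"),
    ({"status": "timeout"}, "retry"),
    ({"status": "server_error"}, "retry"),
    ({"status": "permission_denied"}, "escalate"),
    ({}, "escalate"),  # malformed response with no status at all
]

failures = [
    (result, expected, next_step(result))
    for result, expected in CASES
    if next_step(result) != expected
]
assert not failures, failures
```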

When to Build a Custom AI Agent Testing Framework

Off-the-shelf LLM evaluation tools measure model accuracy, not agent reliability. They miss tool failures, workflow breakdowns, and integration issues entirely.

Custom QA becomes essential for enterprise SaaS, regulated industries (fintech, healthcare), and multi-agent systems. Complex workflows demand end-to-end testing that generic tools can't provide.

Build a custom framework when agents touch proprietary data, compliance rules, or multi-tool orchestration. Your unique pricing model, churn signals, and customer segmentation require custom validation logic that off-the-shelf tools can't match.